Table of Content

In the early stages of the language model boom, performance benchmarks and architecture innovations stole the spotlight. Researchers compared model accuracy, developers debated fine-tuning strategies, and headlines touted breakthroughs in reasoning. But in 2026, as generative AI becomes increasingly operationalized, a new bottleneck is demanding attention: cost per token.

Most teams deploying language models are no longer concerned with just “Can we build it?” The more pressing question is, “Can we afford to scale it?” Whether you’re shipping a customer-facing agent or supporting R&D workflows, understanding how providers charge per token is no longer a backend detail—it’s a core component of infrastructure design.

At Silicon Data, we’ve studied token pricing patterns across commercial APIs and self-hosted deployments to offer clarity in an increasingly fragmented market. This guide distills what matters most in 2026—from how tokens are counted to how they’re priced—and how to think strategically about efficiency.

What Are Tokens, Really?

At a high level, tokens are fragments of text used as input and output for language models. They are not words, but smaller subword units—typically around four characters long in English. The phrase *“language models are useful”*becomes about six tokens, depending on the tokenizer used.

Importantly, tokenization isn’t standardized across providers. Different models use different encoding schemes—OpenAI’s GPT models rely on the tiktoken library, while Anthropic and Google use proprietary schemes. The same text can yield significantly different token counts across platforms. For example, a prompt that registers as 140 tokens in GPT-4 may exceed 180 tokens in Claude or Gemini.

Since providers bill based on token volume, these variations have cost implications. Misunderstanding how your model tokenizes prompts can result in severe underestimation of your infrastructure spend.

Provider | Model | Context | Input | Output | Output:Input |

|---|---|---|---|---|---|

OpenAI | GPT 5.2 | 400K | $1.75 | $14.00 | 8.0× |

OpenAI | GPT 5.2 Pro | 400K | $21.00 | $168.00 | 8.0× |

OpenAI | GPT 4o (20241120) | 128K | $2.50 | $10.00 | 4.0× |

OpenAI | GPT 4o Mini | 128K | $0.15 | $0.60 | 4.0× |

OpenAI | GPT 4.1 | 1050K | $2.00 | $8.00 | 4.0× |

Anthropic | Claude Sonnet 4 | 200K | $3.00 | $15.00 | 5.0× |

Anthropic | Claude Opus 4 | 200K | $15.00 | $75.00 | 5.0× |

DeepSeek | DeepSeek V3.2 | 164K | $0.27–$0.28 (median $0.27) | $0.40–$0.42 (median $0.42) | 1.6× |

DeepSeek | DeepSeek R1 (20250120) | 164K | $0.50–$0.70 (median $0.60) | $2.18–$2.50 (median $2.34) | 3.9× |

Meta | Llama 4 Maverick | 1049K | $0.20–$0.63 (median $0.27) | $0.60–$1.80 (median $0.85) | 3.1× |

A Few Takeaways from This Dataset

To make this more tangible, let’s frame these figures around a typical support ticket workload—roughly 3,150 input tokens and 400 output tokens per request.

With that assumption:

GPT‑4o Mini stands out as the most cost-effective option, delivering complete ticket handling for under $10 per 10,000 tickets—a great fit for low-stakes or high-volume applications.

GPT‑5.2 Pro, while technically advanced, is prohibitively expensive for large-scale use—costing more than 10× the price of GPT‑5.2, and over 100× that of GPT‑4o Mini for the exact same workload.

Claude Sonnet 4 offers a pragmatic middle ground, balancing performance and cost, making it suitable for enterprises with more demanding quality needs without jumping into premium-tier pricing.

And critically, GPT‑4o’s output pricing has jumped to $10 per million tokens. That makes the 400-token response alone cost ~$0.004 per ticket, a cost factor that can no longer be ignored at scale.

What does this mean in practice?

If your support system processes 10,000 tickets per day, switching from GPT-5.2 Pro to GPT-4o Mini could reduce costs from $1,300+ per day to just $7 — a 190× reduction. Of course, that assumes the smaller model can maintain acceptable performance for your use case, but the economic incentives are clear.

And these numbers don’t even account for indirect overhead. Adding a logging layer that stores full prompts and completions can double token consumption. Poorly tuned retrieval that fetches too many chunks — say, 10 instead of 2 — can inflate inputs by 3–4×, especially in long-context models. Even subtle configuration choices, like overlapping context windows or excessive padding in prompts, have meaningful cost impact at scale.

In short: it’s not just the model that matters—it’s the prompt shape, the retrieval logic, and the downstream integration. Monitoring and optimizing these parameters is now a core part of cost engineering in LLM-powered systems.

The Three Token Types That Matter in 2026

While the industry once referred to “tokens” as a single unit, the marketplace has matured into three distinct billing categories: input tokens, output tokens, and the emerging concept of reasoning tokens.

Input tokens are the text you send to the model, such as a user prompt or a batch of documents retrieved via a RAG pipeline. These are typically processed in parallel, making them the cheapest to compute. In contrast, output tokens—representing the model’s generated response—must be produced sequentially, one token at a time. This sequential nature requires more GPU time and, as a result, incurs higher costs.

The third category—reasoning tokens—has emerged with newer models like GPT-5.2 and Anthropic’s Claude 4 Opus. These represent internal “thinking” tokens that don’t show up in the user-facing response but are essential to multi-step reasoning, scratchpad calculations, or function-calling logic. They’re typically priced on par with output tokens or higher.

Understanding which token type dominates your workload is key. A documentation generator will have high output token costs, while a RAG search assistant may burn through input tokens by retrieving and injecting long contexts. More advanced planning involves estimating the ratio of inputs to outputs and matching it to the optimal pricing model.

2026 Pricing Benchmarks Across Leading Providers

In January 2026, we compiled a snapshot of token pricing across major API providers. The data shows a clear stratification in the market: lightweight models optimized for throughput and cost efficiency on one end, and premium reasoning models with sharply higher output costs on the other.

One of the most important patterns is the input-to-output pricing gap. Across nearly all leading models, output tokens are priced significantly higher than input tokens. In fact, the median output-to-input ratio in our dataset is approximately 4×, with some premium models reaching 8×.

For example, OpenAI’s GPT‑4o is priced at $2.50 per million input tokens and $10.00 per million output tokens, reflecting a 4× multiplier. GPT‑4o Mini follows the same structure at a much lower absolute price point, charging $0.15 input and $0.60 output per million tokens.

At the high end, OpenAI’s GPT‑5.2 Pro illustrates how quickly costs escalate for premium models. While its input tokens cost $21 per million, output tokens jump to $168 per million, an 8× multiplier that makes long or verbose responses extremely expensive at scale.

Anthropic’s Claude family shows a similar pattern. Claude Sonnet 4 charges $3.00 per million input tokens and $15.00 per million output tokens, while Claude Opus 4 reaches $15 input and $75 output, reinforcing the trend that output generation dominates inference cost.

Newer and more cost-aggressive entrants, such as DeepSeek and Meta, narrow this gap somewhat. DeepSeek V3.2 shows an output-to-input ratio closer to 1.6×, while Meta’s Llama 4 Maverick sits around 3×. These models are designed for high-throughput workloads where output volume matters more than deep reasoning.

Context window size adds another dimension to pricing. Models like GPT‑4.1 and Llama 4 Maverick support context windows exceeding one million tokens, but larger windows do not inherently raise per-token prices. Instead, they increase the risk of runaway costs if prompts or retrieved context are not tightly controlled.

Understanding Real-World Token Spend

Token pricing often seems deceptively low. A single LLM call might cost less than a penny, which feels trivial—until you multiply it across tens of thousands of interactions. When deployed in real-world applications like customer support, retrieval-augmented generation (RAG), or analytics, token inefficiencies compound rapidly, driving up operating costs in subtle but significant ways.

Let’s ground this in a concrete example: a support ticket automation system powered by an LLM. Every ticket involves a standard workflow:

A system prompt that defines the agent persona and workflow logic (500 tokens)

Retrieved documents or chunks from the knowledge base (2,500 tokens)

The user’s support message (150 tokens)

And the model’s response (400 tokens)

That’s 3,150 input tokens and 400 output tokens — 3,550 total tokens per ticket. Let’s break that down across several popular models based on published 2026 pricing:

Provider | Model | Cost / Ticket (USD) | Cost for 10,000 Tickets |

|---|---|---|---|

OpenAI | GPT-5.2 | $0.0111 | $111.13 |

OpenAI | GPT-5.2 Pro | $0.1334 | $1,333.50 |

OpenAI | GPT-4o | $0.0119 | $118.75 |

OpenAI | GPT-4o Mini | $0.0007 | $7.13 |

Anthropic | Claude 4 Sonnet | $0.0155 | $154.50 |

Strategies for Reducing Token Costs Without Sacrificing Performance

As LLMs become more deeply embedded into production systems, token efficiency is no longer an optimization — it’s a business requirement. For teams deploying at scale, even fractional savings per interaction translate into thousands of dollars in monthly spend. The good news: major gains are often possible without switching models or sacrificing quality.

The first and fastest win is often in the retrieval layer. Retrieval-augmented generation (RAG) pipelines can be noisy by default. Teams routinely pass 4–8 long documents into a prompt when only a snippet or paragraph would do. Instead, setting tighter caps — for example, limiting retrieval to 2–3 shorter chunks or aggressively truncating irrelevant sections — can cut input tokens by more than half with no loss in precision.

Another overlooked opportunity lies in conversation history management. In multi-turn interactions, it’s common to replay the entire dialogue thread on every call. This is expensive and often unnecessary. By summarizing earlier turns or preserving only the last 2–3 interactions, teams can maintain context while cutting thousands of tokens per session.

Even the system prompt — usually written once and forgotten — is a major cost driver. It’s not uncommon to find 800-token prompts repeating instructions, duplicating policy lines, or embedding full examples. Reducing these to concise, single-purpose directives can shave 30–50% off baseline input usage without degrading results.

Controlling the output side matters too. One effective technique is to budget the response length. For example, prompts can request “an answer in 3 short bullets” or “under 100 tokens.” When combined with enforced max token limits in the API call, this helps rein in unpredictable verbosity, especially in summarization or explanation tasks.

At the orchestration layer, many teams are now implementing intelligent routing strategies. Instead of sending every task to a large, expensive model like GPT-5.2, they default to a smaller model such as GPT-4o-mini. Only if confidence thresholds are not met—or if the request is particularly complex—does the system escalate to the higher-tier model. This waterfall-style approach often handles 70–80% of traffic at a fraction of the cost, with no visible quality drop for end users.

For tasks that aren’t latency‑sensitive, batch processing can unlock significant cost savings. Many API providers — including OpenAI — support batch or asynchronous endpoints that process large groups of requests together at roughly half the standard token pricing for both input and output tokens. This is possible because batching improves compute efficiency and allows providers to utilize spare capacity over a 24‑hour window, making it ideal for background workloads like bulk document tagging, report generation, or offline data enrichment.

Finally, one of the simplest and most powerful levers is caching static content. System prompts, reusable instructions, boilerplate messages, and even common context passages can all be stored and reused without repeated re-tokenization. It’s easy to implement and often gets overlooked.

Strategic Model Mixing: Why Routing Makes Financial Sense

In production environments, most teams don’t need the world’s most powerful model for every query. Instead, they’re increasingly using intelligent routing layers that match each request to the least expensive model capable of delivering an acceptable answer. Done right, this strategy can cut LLM spend by 60–90% without sacrificing output quality for the majority of users.

Let’s break it down with an example.

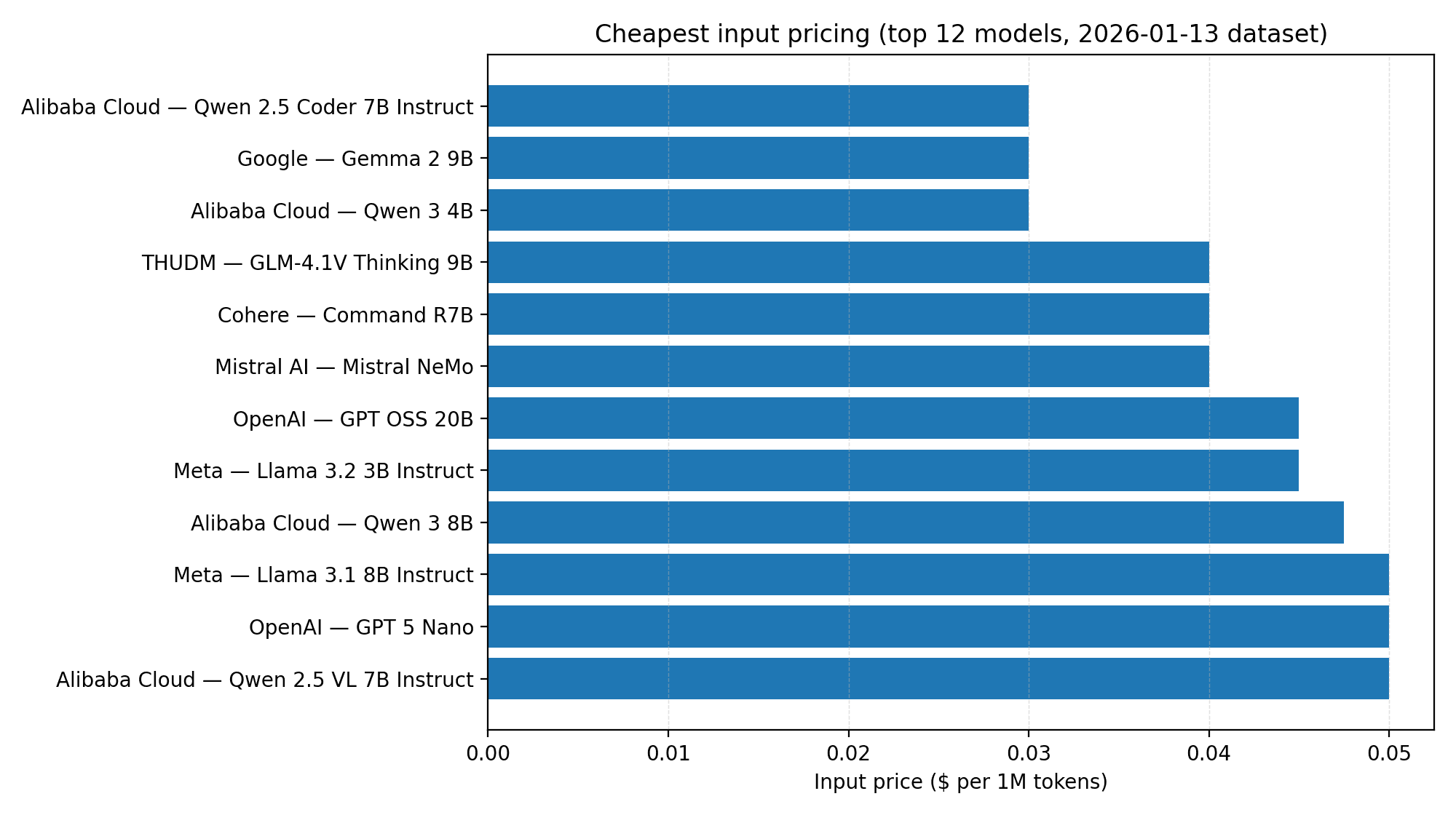

Imagine a typical support interaction that includes 2,000 input tokens and generates 400 output tokens. With that shape, you’re using 2.4K tokens per request. Now scale that to 1 million requests, and model costs start to diverge sharply.

At the low end, something like Qwen 3 4B from Alibaba Cloud might cost only $72 for a million requests. Mid-range models like LLaMA 3.1 8B Instruct come in around $124. But if you go all-in on GPT-4o, you’re looking at $9,000. And at the top end, Claude Opus 4 and GPT-5.2 Pro can cost as much as $60,000 and $109,200, respectively.

These figures highlight just how important routing strategy can be.

Smart Routing, Real Savings

Let’s say you route 60% of your traffic to GPT-4o Mini and only send 40% to Claude Sonnet 4 for more complex queries. That configuration brings your cost down to around $5,124 per million requests—a 57% savings compared to using Claude Sonnet 4 alone.

Take it further. If you route 90% of requests to GPT-4o Mini and only 10% to Claude Sonnet 4, your cost drops to about $1,686 per million—an 86% savings.

Now imagine a true edge-case strategy, where 99% of traffic goes to GPT-4o Mini and just 1% hits GPT-5.2 Pro. Even with that single percent priced at over $100,000 per million requests, your overall cost still comes out to just $1,626.60—a staggering 98.5% cheaper than running every request through GPT-5.2 Pro.

Model | Input/Output ($/1M) | Cost per 1M requests (2k in / 400 out) |

|---|---|---|

Alibaba Cloud — Qwen 3 4B | $0.03 / $0.03 | $72 |

Meta — Llama 3.1 8B Instruct (median) | $0.05 / $0.06 | $124 |

OpenAI — GPT 4o Mini | $0.15 / $0.60 | $540 |

DeepSeek — DeepSeek V3.2 | $0.27 / $0.42 | $708 |

OpenAI — GPT 4.1 | $2.00 / $8.00 | $7,200 |

OpenAI — GPT 4o (20241120) | $2.50 / $10.00 | $9,000 |

Anthropic — Claude Sonnet 4 | $3.00 / $15.00 | $12,000 |

Anthropic — Claude Opus 4 | $15.00 / $75.00 | $60,000 |

OpenAI — GPT 5.2 Pro | $21.00 / $168.00 | $109,200 |

What Are Tokens, Really?

At a high level, tokens are fragments of text used as input and output for language models. They are not words, but smaller subword units—typically around four characters long in English. The phrase *“language models are useful”*becomes about six tokens, depending on the tokenizer used.

Importantly, tokenization isn’t standardized across providers. Different models use different encoding schemes—OpenAI’s GPT models rely on the tiktoken library, while Anthropic and Google use proprietary schemes. The same text can yield significantly different token counts across platforms. For example, a prompt that registers as 140 tokens in GPT-4 may exceed 180 tokens in Claude or Gemini.

Since providers bill based on token volume, these variations have cost implications. Misunderstanding how your model tokenizes prompts can result in severe underestimation of your infrastructure spend.

Provider | Model | Context | Input | Output | Output:Input |

|---|---|---|---|---|---|

OpenAI | GPT 5.2 | 400K | $1.75 | $14.00 | 8.0× |

OpenAI | GPT 5.2 Pro | 400K | $21.00 | $168.00 | 8.0× |

OpenAI | GPT 4o (20241120) | 128K | $2.50 | $10.00 | 4.0× |

OpenAI | GPT 4o Mini | 128K | $0.15 | $0.60 | 4.0× |

OpenAI | GPT 4.1 | 1050K | $2.00 | $8.00 | 4.0× |

Anthropic | Claude Sonnet 4 | 200K | $3.00 | $15.00 | 5.0× |

Anthropic | Claude Opus 4 | 200K | $15.00 | $75.00 | 5.0× |

DeepSeek | DeepSeek V3.2 | 164K | $0.27–$0.28 (median $0.27) | $0.40–$0.42 (median $0.42) | 1.6× |

DeepSeek | DeepSeek R1 (20250120) | 164K | $0.50–$0.70 (median $0.60) | $2.18–$2.50 (median $2.34) | 3.9× |

Meta | Llama 4 Maverick | 1049K | $0.20–$0.63 (median $0.27) | $0.60–$1.80 (median $0.85) | 3.1× |

A Few Takeaways from This Dataset

To make this more tangible, let’s frame these figures around a typical support ticket workload—roughly 3,150 input tokens and 400 output tokens per request.

With that assumption:

GPT‑4o Mini stands out as the most cost-effective option, delivering complete ticket handling for under $10 per 10,000 tickets—a great fit for low-stakes or high-volume applications.

GPT‑5.2 Pro, while technically advanced, is prohibitively expensive for large-scale use—costing more than 10× the price of GPT‑5.2, and over 100× that of GPT‑4o Mini for the exact same workload.

Claude Sonnet 4 offers a pragmatic middle ground, balancing performance and cost, making it suitable for enterprises with more demanding quality needs without jumping into premium-tier pricing.

And critically, GPT‑4o’s output pricing has jumped to $10 per million tokens. That makes the 400-token response alone cost ~$0.004 per ticket, a cost factor that can no longer be ignored at scale.

What does this mean in practice?

If your support system processes 10,000 tickets per day, switching from GPT-5.2 Pro to GPT-4o Mini could reduce costs from $1,300+ per day to just $7 — a 190× reduction. Of course, that assumes the smaller model can maintain acceptable performance for your use case, but the economic incentives are clear.

And these numbers don’t even account for indirect overhead. Adding a logging layer that stores full prompts and completions can double token consumption. Poorly tuned retrieval that fetches too many chunks — say, 10 instead of 2 — can inflate inputs by 3–4×, especially in long-context models. Even subtle configuration choices, like overlapping context windows or excessive padding in prompts, have meaningful cost impact at scale.

In short: it’s not just the model that matters—it’s the prompt shape, the retrieval logic, and the downstream integration. Monitoring and optimizing these parameters is now a core part of cost engineering in LLM-powered systems.

The Three Token Types That Matter in 2026

While the industry once referred to “tokens” as a single unit, the marketplace has matured into three distinct billing categories: input tokens, output tokens, and the emerging concept of reasoning tokens.

Input tokens are the text you send to the model, such as a user prompt or a batch of documents retrieved via a RAG pipeline. These are typically processed in parallel, making them the cheapest to compute. In contrast, output tokens—representing the model’s generated response—must be produced sequentially, one token at a time. This sequential nature requires more GPU time and, as a result, incurs higher costs.

The third category—reasoning tokens—has emerged with newer models like GPT-5.2 and Anthropic’s Claude 4 Opus. These represent internal “thinking” tokens that don’t show up in the user-facing response but are essential to multi-step reasoning, scratchpad calculations, or function-calling logic. They’re typically priced on par with output tokens or higher.

Understanding which token type dominates your workload is key. A documentation generator will have high output token costs, while a RAG search assistant may burn through input tokens by retrieving and injecting long contexts. More advanced planning involves estimating the ratio of inputs to outputs and matching it to the optimal pricing model.

2026 Pricing Benchmarks Across Leading Providers

In January 2026, we compiled a snapshot of token pricing across major API providers. The data shows a clear stratification in the market: lightweight models optimized for throughput and cost efficiency on one end, and premium reasoning models with sharply higher output costs on the other.

One of the most important patterns is the input-to-output pricing gap. Across nearly all leading models, output tokens are priced significantly higher than input tokens. In fact, the median output-to-input ratio in our dataset is approximately 4×, with some premium models reaching 8×.

For example, OpenAI’s GPT‑4o is priced at $2.50 per million input tokens and $10.00 per million output tokens, reflecting a 4× multiplier. GPT‑4o Mini follows the same structure at a much lower absolute price point, charging $0.15 input and $0.60 output per million tokens.

At the high end, OpenAI’s GPT‑5.2 Pro illustrates how quickly costs escalate for premium models. While its input tokens cost $21 per million, output tokens jump to $168 per million, an 8× multiplier that makes long or verbose responses extremely expensive at scale.

Anthropic’s Claude family shows a similar pattern. Claude Sonnet 4 charges $3.00 per million input tokens and $15.00 per million output tokens, while Claude Opus 4 reaches $15 input and $75 output, reinforcing the trend that output generation dominates inference cost.

Newer and more cost-aggressive entrants, such as DeepSeek and Meta, narrow this gap somewhat. DeepSeek V3.2 shows an output-to-input ratio closer to 1.6×, while Meta’s Llama 4 Maverick sits around 3×. These models are designed for high-throughput workloads where output volume matters more than deep reasoning.

Context window size adds another dimension to pricing. Models like GPT‑4.1 and Llama 4 Maverick support context windows exceeding one million tokens, but larger windows do not inherently raise per-token prices. Instead, they increase the risk of runaway costs if prompts or retrieved context are not tightly controlled.

Understanding Real-World Token Spend

Token pricing often seems deceptively low. A single LLM call might cost less than a penny, which feels trivial—until you multiply it across tens of thousands of interactions. When deployed in real-world applications like customer support, retrieval-augmented generation (RAG), or analytics, token inefficiencies compound rapidly, driving up operating costs in subtle but significant ways.

Let’s ground this in a concrete example: a support ticket automation system powered by an LLM. Every ticket involves a standard workflow:

A system prompt that defines the agent persona and workflow logic (500 tokens)

Retrieved documents or chunks from the knowledge base (2,500 tokens)

The user’s support message (150 tokens)

And the model’s response (400 tokens)

That’s 3,150 input tokens and 400 output tokens — 3,550 total tokens per ticket. Let’s break that down across several popular models based on published 2026 pricing:

Provider | Model | Cost / Ticket (USD) | Cost for 10,000 Tickets |

|---|---|---|---|

OpenAI | GPT-5.2 | $0.0111 | $111.13 |

OpenAI | GPT-5.2 Pro | $0.1334 | $1,333.50 |

OpenAI | GPT-4o | $0.0119 | $118.75 |

OpenAI | GPT-4o Mini | $0.0007 | $7.13 |

Anthropic | Claude 4 Sonnet | $0.0155 | $154.50 |

Strategies for Reducing Token Costs Without Sacrificing Performance

As LLMs become more deeply embedded into production systems, token efficiency is no longer an optimization — it’s a business requirement. For teams deploying at scale, even fractional savings per interaction translate into thousands of dollars in monthly spend. The good news: major gains are often possible without switching models or sacrificing quality.

The first and fastest win is often in the retrieval layer. Retrieval-augmented generation (RAG) pipelines can be noisy by default. Teams routinely pass 4–8 long documents into a prompt when only a snippet or paragraph would do. Instead, setting tighter caps — for example, limiting retrieval to 2–3 shorter chunks or aggressively truncating irrelevant sections — can cut input tokens by more than half with no loss in precision.

Another overlooked opportunity lies in conversation history management. In multi-turn interactions, it’s common to replay the entire dialogue thread on every call. This is expensive and often unnecessary. By summarizing earlier turns or preserving only the last 2–3 interactions, teams can maintain context while cutting thousands of tokens per session.

Even the system prompt — usually written once and forgotten — is a major cost driver. It’s not uncommon to find 800-token prompts repeating instructions, duplicating policy lines, or embedding full examples. Reducing these to concise, single-purpose directives can shave 30–50% off baseline input usage without degrading results.

Controlling the output side matters too. One effective technique is to budget the response length. For example, prompts can request “an answer in 3 short bullets” or “under 100 tokens.” When combined with enforced max token limits in the API call, this helps rein in unpredictable verbosity, especially in summarization or explanation tasks.

At the orchestration layer, many teams are now implementing intelligent routing strategies. Instead of sending every task to a large, expensive model like GPT-5.2, they default to a smaller model such as GPT-4o-mini. Only if confidence thresholds are not met—or if the request is particularly complex—does the system escalate to the higher-tier model. This waterfall-style approach often handles 70–80% of traffic at a fraction of the cost, with no visible quality drop for end users.

For tasks that aren’t latency‑sensitive, batch processing can unlock significant cost savings. Many API providers — including OpenAI — support batch or asynchronous endpoints that process large groups of requests together at roughly half the standard token pricing for both input and output tokens. This is possible because batching improves compute efficiency and allows providers to utilize spare capacity over a 24‑hour window, making it ideal for background workloads like bulk document tagging, report generation, or offline data enrichment.

Finally, one of the simplest and most powerful levers is caching static content. System prompts, reusable instructions, boilerplate messages, and even common context passages can all be stored and reused without repeated re-tokenization. It’s easy to implement and often gets overlooked.

Strategic Model Mixing: Why Routing Makes Financial Sense

In production environments, most teams don’t need the world’s most powerful model for every query. Instead, they’re increasingly using intelligent routing layers that match each request to the least expensive model capable of delivering an acceptable answer. Done right, this strategy can cut LLM spend by 60–90% without sacrificing output quality for the majority of users.

Let’s break it down with an example.

Imagine a typical support interaction that includes 2,000 input tokens and generates 400 output tokens. With that shape, you’re using 2.4K tokens per request. Now scale that to 1 million requests, and model costs start to diverge sharply.

At the low end, something like Qwen 3 4B from Alibaba Cloud might cost only $72 for a million requests. Mid-range models like LLaMA 3.1 8B Instruct come in around $124. But if you go all-in on GPT-4o, you’re looking at $9,000. And at the top end, Claude Opus 4 and GPT-5.2 Pro can cost as much as $60,000 and $109,200, respectively.

These figures highlight just how important routing strategy can be.

Smart Routing, Real Savings

Let’s say you route 60% of your traffic to GPT-4o Mini and only send 40% to Claude Sonnet 4 for more complex queries. That configuration brings your cost down to around $5,124 per million requests—a 57% savings compared to using Claude Sonnet 4 alone.

Take it further. If you route 90% of requests to GPT-4o Mini and only 10% to Claude Sonnet 4, your cost drops to about $1,686 per million—an 86% savings.

Now imagine a true edge-case strategy, where 99% of traffic goes to GPT-4o Mini and just 1% hits GPT-5.2 Pro. Even with that single percent priced at over $100,000 per million requests, your overall cost still comes out to just $1,626.60—a staggering 98.5% cheaper than running every request through GPT-5.2 Pro.

Model | Input/Output ($/1M) | Cost per 1M requests (2k in / 400 out) |

|---|---|---|

Alibaba Cloud — Qwen 3 4B | $0.03 / $0.03 | $72 |

Meta — Llama 3.1 8B Instruct (median) | $0.05 / $0.06 | $124 |

OpenAI — GPT 4o Mini | $0.15 / $0.60 | $540 |

DeepSeek — DeepSeek V3.2 | $0.27 / $0.42 | $708 |

OpenAI — GPT 4.1 | $2.00 / $8.00 | $7,200 |

OpenAI — GPT 4o (20241120) | $2.50 / $10.00 | $9,000 |

Anthropic — Claude Sonnet 4 | $3.00 / $15.00 | $12,000 |

Anthropic — Claude Opus 4 | $15.00 / $75.00 | $60,000 |

OpenAI — GPT 5.2 Pro | $21.00 / $168.00 | $109,200 |

What Actually Drives Inference Cost?

Token pricing might look simple on the surface — a few cents per million tokens, split into input and output — but under the hood, those prices reflect a complex blend of infrastructure economics, model architecture, and product strategy. While publicly available datasets don’t directly expose GPU-level details, the observed pricing patterns strongly align with known cost drivers in LLM inference.

Model Size and Architecture

Unsurprisingly, larger and more capable models tend to cost more per token. It’s not just about the number of parameters, but also the underlying architecture. For instance, models that use attention-efficient mechanisms or sparsity-aware layers may have lower inference costs per token, even if they’re relatively large. Conversely, general-purpose models with broader capabilities often carry higher price tags due to more demanding hardware requirements and memory footprints.

GPT-5.2 Pro, for example, likely runs on high-bandwidth multi-GPU configurations with architectural optimizations for accuracy over speed. That’s reflected in its $21/$168 per million token pricing — the highest in our snapshot.

Output Token Multipliers

One of the most consistent pricing asymmetries is between input and output tokens. Across the board, output tokens are significantly more expensive, often 3–8× the rate of input tokens. In the 2026 market data, the median output-to-input price ratio is around 4×.

This makes sense from a computational standpoint: generating tokens autoregressively — particularly with beam search, temperature sampling, and alignment layers — consumes more GPU time and memory than merely embedding inputs.

From a financial perspective, this means lengthy completions can balloon costs even when inputs are small. It also explains why high-throughput systems (like summarizers or content generators) need especially strict output budgeting.

Context Window Size

Long-context models like Claude 4 or GPT-4.1 (with 1M+ token capacity) don’t necessarily charge more just because they can handle more tokens. In fact, GPT-4.1 supports 1,050K context tokens at relatively moderate per-token rates.

But there’s a trap here: long context windows create the temptation to over-prompt, leading to bloated input sizes and unnecessary cost. Retrieval systems, in particular, often pass 10K+ tokens into a model because they can — not because it’s effective. The takeaway: the context window doesn’t drive price, but what you do with it certainly does.

Infrastructure-Level Levers: Batching, Caching, and Routing

Prompt-level optimization (rewriting system messages, trimming user input) can help — but system-level architecture makes a far bigger difference.

Batching reduces overhead and increases GPU utilization. OpenAI’s batch endpoint, for example, offers bulk inference at roughly 50% of real-time token costs.

Caching prevents repeated tokenization and embedding of static components, like system prompts or repeated context blocks.

Routing, as covered earlier, lets teams segment requests across models based on complexity — sending simple tasks to GPT-4o-mini and escalating only when needed.

Combined, these techniques can reduce total inference cost by 5–10×, outperforming anything achievable through micro-tuning prompt copy alone.

What’s Next in Token Billing?

The future of token pricing will likely shift away from simple per-token billing toward hybrid and performance-based models.

Several providers are already testing subscription models with overage tiers. Others are introducing billing by action, not tokens—charging based on the outcome, like successful classification or intent detection. There’s also growing interest in off-peak pricing, where token rates are discounted during non-peak hours, encouraging teams to queue low-priority workloads overnight.

Window-based billing is also evolving. Some providers offer discounts for cached inputs—if the prompt has been seen before, you’re charged only a fraction. This could make reranking systems and session-aware agents far cheaper in the long run.

What Actually Drives Inference Cost?

Token pricing might look simple on the surface — a few cents per million tokens, split into input and output — but under the hood, those prices reflect a complex blend of infrastructure economics, model architecture, and product strategy. While publicly available datasets don’t directly expose GPU-level details, the observed pricing patterns strongly align with known cost drivers in LLM inference.

Model Size and Architecture

Unsurprisingly, larger and more capable models tend to cost more per token. It’s not just about the number of parameters, but also the underlying architecture. For instance, models that use attention-efficient mechanisms or sparsity-aware layers may have lower inference costs per token, even if they’re relatively large. Conversely, general-purpose models with broader capabilities often carry higher price tags due to more demanding hardware requirements and memory footprints.

GPT-5.2 Pro, for example, likely runs on high-bandwidth multi-GPU configurations with architectural optimizations for accuracy over speed. That’s reflected in its $21/$168 per million token pricing — the highest in our snapshot.

Output Token Multipliers

One of the most consistent pricing asymmetries is between input and output tokens. Across the board, output tokens are significantly more expensive, often 3–8× the rate of input tokens. In the 2026 market data, the median output-to-input price ratio is around 4×.

This makes sense from a computational standpoint: generating tokens autoregressively — particularly with beam search, temperature sampling, and alignment layers — consumes more GPU time and memory than merely embedding inputs.

From a financial perspective, this means lengthy completions can balloon costs even when inputs are small. It also explains why high-throughput systems (like summarizers or content generators) need especially strict output budgeting.

Context Window Size

Long-context models like Claude 4 or GPT-4.1 (with 1M+ token capacity) don’t necessarily charge more just because they can handle more tokens. In fact, GPT-4.1 supports 1,050K context tokens at relatively moderate per-token rates.

But there’s a trap here: long context windows create the temptation to over-prompt, leading to bloated input sizes and unnecessary cost. Retrieval systems, in particular, often pass 10K+ tokens into a model because they can — not because it’s effective. The takeaway: the context window doesn’t drive price, but what you do with it certainly does.

Infrastructure-Level Levers: Batching, Caching, and Routing

Prompt-level optimization (rewriting system messages, trimming user input) can help — but system-level architecture makes a far bigger difference.

Batching reduces overhead and increases GPU utilization. OpenAI’s batch endpoint, for example, offers bulk inference at roughly 50% of real-time token costs.

Caching prevents repeated tokenization and embedding of static components, like system prompts or repeated context blocks.

Routing, as covered earlier, lets teams segment requests across models based on complexity — sending simple tasks to GPT-4o-mini and escalating only when needed.

Combined, these techniques can reduce total inference cost by 5–10×, outperforming anything achievable through micro-tuning prompt copy alone.

What’s Next in Token Billing?

The future of token pricing will likely shift away from simple per-token billing toward hybrid and performance-based models.

Several providers are already testing subscription models with overage tiers. Others are introducing billing by action, not tokens—charging based on the outcome, like successful classification or intent detection. There’s also growing interest in off-peak pricing, where token rates are discounted during non-peak hours, encouraging teams to queue low-priority workloads overnight.

Window-based billing is also evolving. Some providers offer discounts for cached inputs—if the prompt has been seen before, you’re charged only a fraction. This could make reranking systems and session-aware agents far cheaper in the long run.

Written by

Carmen Li

Founder at Silicon Data

Share this story

Articles you may like